In O2 we minimized the number of available partitions (or queues) to simplify the job submission process.

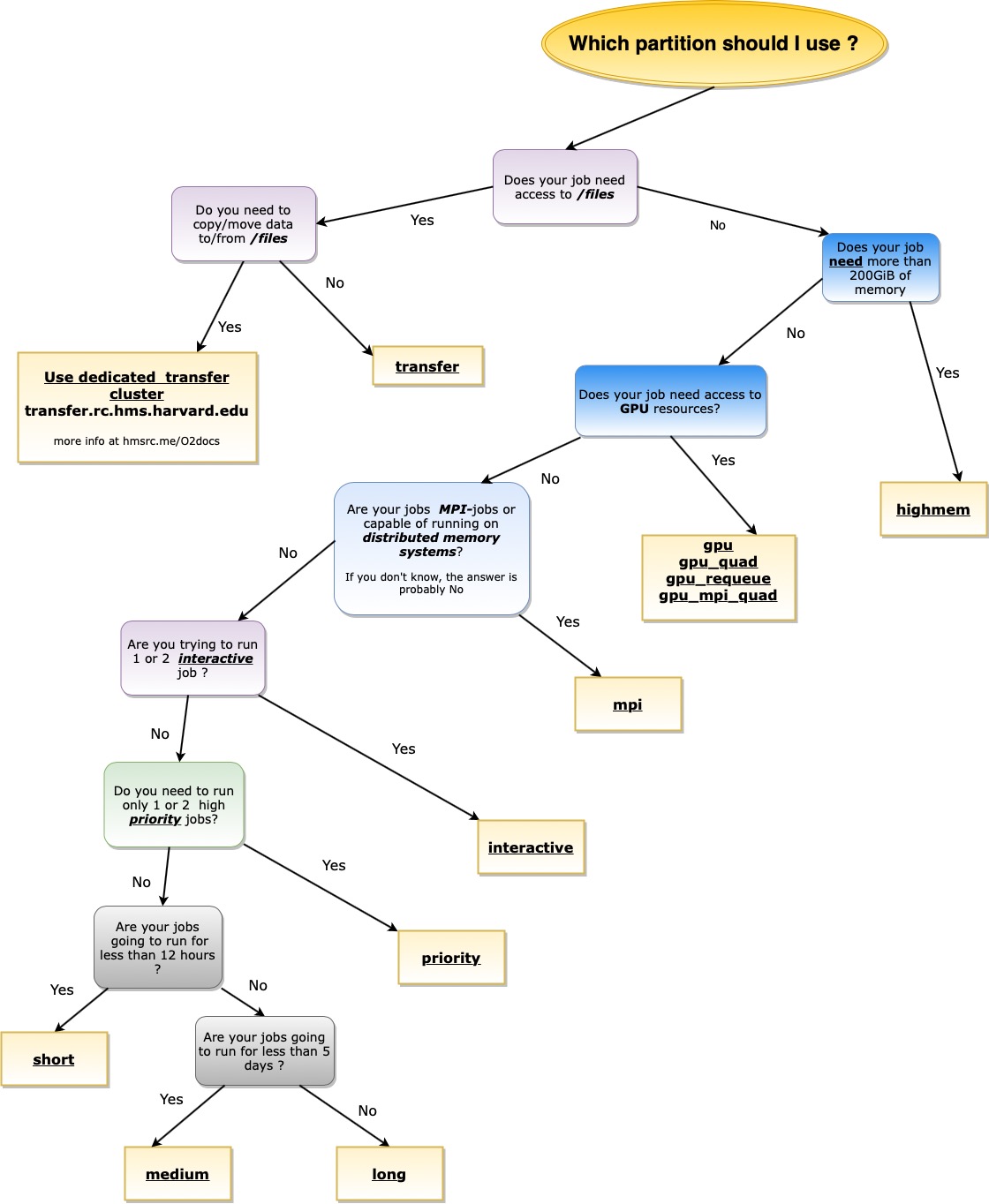

You can use the flow chart below to determine which partition is best for the jobs you plan to run.

Note 1: All partitions can currently be used to request interactive sessions. The interactive partition has a dedicated set of nodes and a higher priority.

Note 2: All partitions can run single-core or multi-core jobs. For more information about parallel jobs in O2, read our dedicated wiki page.

Details about the available partitions are listed in the table below:

| Partition | Job Type | Priority | Max cores per job | Max runtime limit | Min runtime limit | Notes |
|---|---|---|---|---|---|---|
| interactive | interactive | 14 | 20 | 12 hours | n/a | 2 job limit, 20 core/job limit, 250GiB/job memory limit, default memory 4GB |
| short | batch & interactive | 12 | 20 | 12 hours | n/a | 20 core/job limit, 250GiB/job memory limit |
| medium | | 6 | 20 | 5 days | 12 hours | 20 core/job limit, 250GiB/job memory limit |
| long | | 4 | 20 | 30 days | 5 days | 20 core/job limit, 250GiB/job memory limit |
| mpi | | 12 | 640 | 5 days | n/a | Invite-only. Email rchelp. |
| priority | | 14 | 20 | 30 days | n/a | Limit of 2 running jobs at once, 20 core/job limit, 250GiB/job memory limit |
| transfer | | n/a | 4 | 5 days | n/a | Limit of 5 concurrent cores per user for transfers between O2 and … (see File Transfer for more information). Invite-only. Email rchelp. |
| gpu, gpu_quad | | n/a | 20 | 5 days | n/a | Additional limits apply to the GPU partitions; see the Using O2 GPU resources page for more details. |
| gpu_mpi_quad | | n/a | 240 | 5 days | n/a | Invite-only. Email rchelp. |
| gpu_requeue | | n/a | 20 | 5 days | n/a | See O2 GPU Re-Queue Partition for additional details. |
| highmem | | n/a | 16 | 5 days | n/a | Invite-only. Email rchelp. |
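
The runtime limits in the table form three non-overlapping tiers for general batch work: short (up to 12 hours), medium (12 hours to 5 days) and long (5 to 30 days). The sketch below encodes that logic as a helper. It is a hypothetical illustration, not an O2 tool: `choose_partition`, `RUNTIME_TIERS`, `MAX_CORES` and `MAX_MEM_GIB` are names invented here. It only considers short, medium and long and ignores the interactive, priority, invite-only and GPU partitions.

```python
from datetime import timedelta

# Per-job limits copied from the table for the short, medium and long partitions.
# Hypothetical helper: invite-only, GPU, interactive and priority partitions are ignored.
MAX_CORES = 20
MAX_MEM_GIB = 250

# (partition, max runtime limit) in increasing order; each tier's minimum is the
# previous tier's maximum, which matches the "Min runtime limit" column.
RUNTIME_TIERS = [
    ("short", timedelta(hours=12)),
    ("medium", timedelta(days=5)),
    ("long", timedelta(days=30)),
]


def choose_partition(runtime: timedelta, cores: int = 1, mem_gib: float = 1.0) -> str:
    """Return the first general-purpose partition whose max runtime fits the request."""
    if cores < 1 or cores > MAX_CORES:
        raise ValueError(f"cores must be between 1 and {MAX_CORES}")
    if mem_gib > MAX_MEM_GIB:
        raise ValueError(f"memory above {MAX_MEM_GIB} GiB exceeds the per-job limit")
    for name, max_runtime in RUNTIME_TIERS:
        if runtime <= max_runtime:
            return name
    raise ValueError("runtime exceeds the 30-day limit of the long partition")
```

For example, `choose_partition(timedelta(days=2), cores=8)` returns `"medium"`, and a 31-day request raises `ValueError` because no partition listed above accepts it.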

Although Slurm interprets memory units as KiB, MiB, and GiB in the background, O2 users must use K, M, or G as the unit when requesting memory.
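
Because the units are binary, a request of `4G` corresponds to 4 × 1024 = 4096 MiB. The sketch below illustrates that conversion. `mem_to_mib` is a hypothetical helper, not part of Slurm or O2. It accepts only an integer followed by an uppercase K, M or G and rejects forms such as `4GB`. That restriction mirrors the guidance above and is not a claim about exactly which strings Slurm itself accepts.

```python
import re

# Binary multipliers expressed in MiB: K = KiB, M = MiB, G = GiB.
_UNIT_TO_MIB = {"K": 1 / 1024, "M": 1, "G": 1024}


def mem_to_mib(request: str) -> float:
    """Convert a memory request such as '4G' or '500M' to MiB (hypothetical helper)."""
    match = re.fullmatch(r"(\d+)([KMG])", request.strip())
    if match is None:
        raise ValueError(f"{request!r}: use an integer followed by K, M or G, e.g. '4G'")
    amount, unit = int(match.group(1)), match.group(2)
    return amount * _UNIT_TO_MIB[unit]
```

With this helper, the 250GiB per-job limit in the table works out to `mem_to_mib("250G") == 256000` MiB.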

There is a limit on the total CPU-hours that can be reserved by a single lab at any given time. This limit was introduced to prevent a single lab from locking down a large portion of the cluster for extended periods of time. It becomes active only when multiple users in the same lab are allocating a large portion of the O2 cluster resources. This can happen, for example, if a few users have thousands of multi-day jobs or hundreds of multi-week jobs running. When the limit is active, the remaining pending jobs display the message AssocGrpCPURunMinute.

Note: The "gpu" partition has additional limits that might also trigger the AssocGrpCPURunMinute message. For more details about the "gpu" partition, please refer to the "Using O2 GPU resources" wiki page.
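
To see how a few users can reach a lab-wide limit, it helps to work through the arithmetic of reserved CPU time. The sketch below assumes the limit is accounted the way Slurm's group run-minutes limits usually are: each running job counts as its allocated cores multiplied by its remaining requested run time. This page does not state the actual O2 limit value. `RunningJob`, `reserved_cpu_hours` and the example workload are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunningJob:
    cores: int
    remaining_hours: float  # time left before the job reaches its requested time limit


def reserved_cpu_hours(jobs: list[RunningJob]) -> float:
    """Assumed accounting: sum of cores x remaining time limit over all running jobs."""
    return sum(job.cores * job.remaining_hours for job in jobs)


# Hypothetical lab workload: 1,000 four-core jobs, each with 3 days (72 hours) left.
lab_jobs = [RunningJob(cores=4, remaining_hours=72) for _ in range(1000)]
print(reserved_cpu_hours(lab_jobs))       # 288000 CPU-hours
print(reserved_cpu_hours(lab_jobs) * 60)  # 17280000 CPU-minutes
```

Under this accounting, the reservation shrinks as jobs run, because their remaining time decreases. This is why pending jobs can start again once running jobs make progress or finish.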
